Fluent dreaming for language models (AI interpretability method)

My coworkers at Confirm Labs and I recently posted a paper on fluent dreaming for language models! arXiv link. We have a companion page here demonstrating the code. We also have a demo Colab notebook here.

Dreaming, aka “feature visualization,” is an interpretability approach popularized by DeepDream that involves optimizing the input of a neural network to maximize an internal feature like a neuron’s activation. We adapt dreaming to language models.

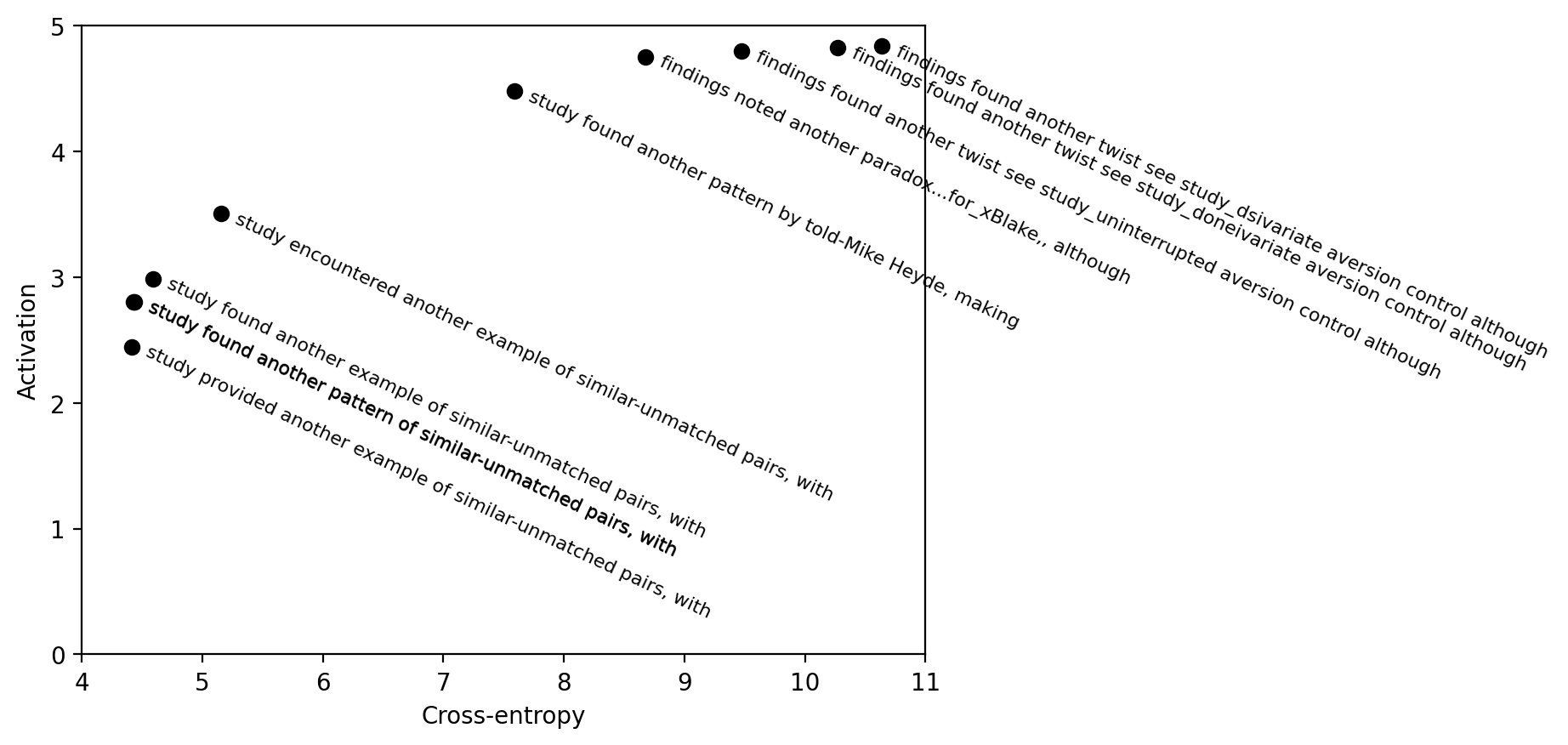
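To make the core idea concrete, here is a minimal sketch of dreaming in the continuous setting: gradient ascent on an input to maximize one activation. The "neuron" is a hypothetical toy function (`tanh` of a fixed weighted sum), not a real network, and the names `WEIGHTS`, `activation`, and `dream` are made up for illustration.

```python
import math

# Hypothetical toy "neuron": activation = tanh(w . x). Not a real model.
WEIGHTS = [0.5, -1.0, 2.0]

def activation(x):
    """Activation of the toy neuron for a continuous input vector x."""
    return math.tanh(sum(w * xi for w, xi in zip(WEIGHTS, x)))

def activation_grad(x):
    """Gradient of the activation: d/dx tanh(w . x) = (1 - tanh^2(w . x)) * w."""
    a = activation(x)
    return [(1.0 - a * a) * w for w in WEIGHTS]

def dream(x, steps=100, lr=0.1):
    """Gradient ascent on the input itself; the neuron's weights stay fixed."""
    for _ in range(steps):
        grad = activation_grad(x)
        x = [xi + lr * gi for xi, gi in zip(x, grad)]
    return x

start = [0.0, 0.0, 0.0]
dreamed = dream(start)
print(activation(start), activation(dreamed))
```

This only works because the input is a vector of real numbers that can be nudged along the gradient. A prompt is a sequence of discrete tokens, so the same update step is not directly available for language models.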

Past dreaming work has focused almost exclusively on vision models because their inputs are continuous and easily optimized. Language model inputs are discrete and hard to optimize. To solve this issue, we adapted techniques from the adversarial attacks literature (GCG, Zou et al., 2023). Our algorithm, Evolutionary Prompt Optimization (EPO), optimizes over a Pareto frontier of activation and fluency:

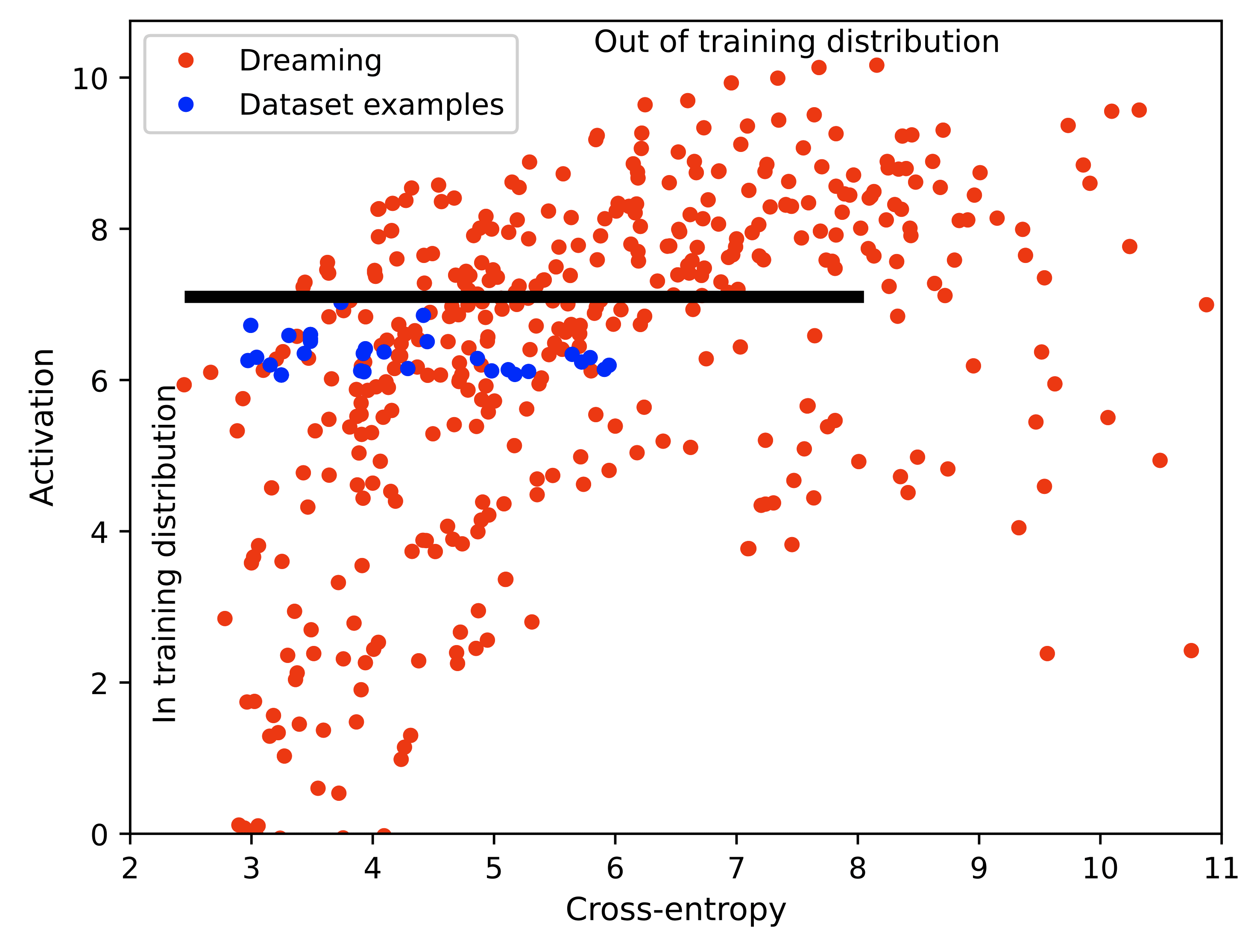
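As a rough illustration of what a Pareto frontier of activation and fluency means, the sketch below filters a set of candidate prompts down to the non-dominated ones. It is a generic Pareto filter, not the EPO algorithm itself. It assumes fluency is scored by cross-entropy (lower is more fluent), and the `Candidate` type, the prompt labels, and all numbers are invented for the example.

```python
from typing import NamedTuple

class Candidate(NamedTuple):
    prompt: str
    activation: float      # higher is better
    cross_entropy: float   # lower is better (assumed fluency measure)

def dominates(a, b):
    """True if a is at least as good as b on both axes and strictly better on one."""
    no_worse = a.activation >= b.activation and a.cross_entropy <= b.cross_entropy
    strictly_better = a.activation > b.activation or a.cross_entropy < b.cross_entropy
    return no_worse and strictly_better

def pareto_frontier(candidates):
    """Keep candidates that no other candidate dominates, sorted by cross-entropy."""
    frontier = [c for c in candidates
                if not any(dominates(other, c) for other in candidates)]
    return sorted(frontier, key=lambda c: c.cross_entropy)

# Made-up scores, purely for illustration.
candidates = [
    Candidate("prompt A", activation=1.0, cross_entropy=2.0),
    Candidate("prompt B", activation=3.0, cross_entropy=4.0),
    Candidate("prompt C", activation=2.5, cross_entropy=5.0),  # dominated by B
    Candidate("prompt D", activation=5.0, cross_entropy=8.0),
    Candidate("prompt E", activation=0.5, cross_entropy=2.5),  # dominated by A
]

frontier = pareto_frontier(candidates)
for c in frontier:
    print(c.prompt, c.activation, c.cross_entropy)
```

Each prompt left on the frontier is a different trade-off: moving along it buys more activation at the cost of less fluent text, so there is a range of prompts to inspect rather than a single optimum.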

In the paper, we compare dreaming with max-activating dataset examples, demonstrating that dreaming achieves higher activations at perplexities similar to those of the training set. Dreaming is especially exciting because some mildly out-of-distribution prompts can reveal details of a circuit. For example, Pythia-12B layer 10, neuron 5 responds very strongly to “f. example”, “f.ex.” and “i.e” but responds even more strongly to “example f.ie.”, a phrase the model has probably never seen in training.

Like max-activating dataset examples, language model dreams will be hard to interpret in the face of polysemanticity. We would be excited about applying dreaming to more monosemantic feature sets resulting from dictionary learning/sparse autoencoders.

We also think algorithms like EPO will be useful for fluent algorithmic redteaming. We are working on that now!